Humanity’s next ambitious mission is to land on the Moon and create a permanent base on the Earth’s satellite. At the same time, technologies are being developed that will make it possible to fly into deep space.

Leading countries are increasing their budgets to develop space technologies that will one day make it possible to fly to Mars and beyond. According to BIS Research, the size of this market will grow by more than 6% per year and reach $33.90 billion by 2030. Revolution Notes looked into what technologies would allow humanity to reach the solar system’s boundaries.

Super-heavy rockets with refueling

Space agencies are building advanced rockets to carry crewed missions into deep space. For example, a super-heavy launch vehicle called the Space Launch System (SLS) is being developed as part of NASA’s Artemis program. After a series of missions to the Moon, it could be used for longer flights. Private players such as SpaceX and Virgin Galactic are also developing their own rockets.

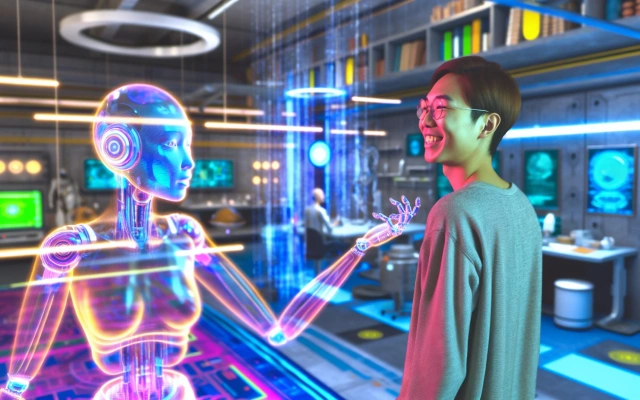

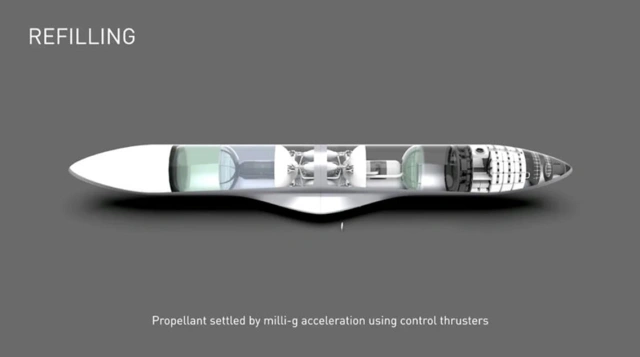

Elon Musk’s Starship super-heavy rocket is still being tested, but it has already been selected to carry astronauts to the Moon in 2026. Before that, SpaceX must not only demonstrate a successful launch but also prove that its Starship refueling system will operate reliably in space. The plan is for tanker variants of Starship to refuel the crewed ship.

This will allow it to fly further than the Moon, including to Mars. “To achieve colonization of Mars within three decades, we need ship production to be 100 Starships per year, but ideally up to 300 per year,” Elon Musk said of the company’s goal.

At the same time, NASA announced that it would use Blue Origin’s New Glenn rocket to launch a new mission to Mars. The two-stage heavy rocket will deliver two Photon probes to Mars at once. The launch is planned for either August 2024 or the end of 2026. As part of the Escape and Plasma Acceleration and Dynamics Explorer (ESCAPADE) mission, the probes will study how the solar wind interacts with the magnetosphere of Mars.

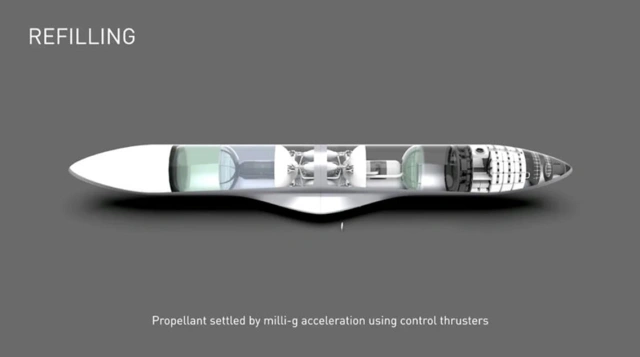

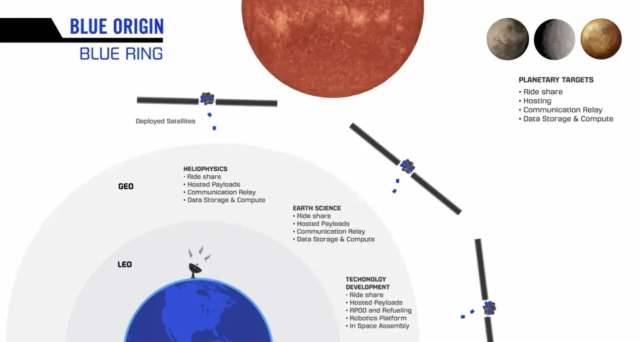

Blue Origin itself is working on the Blue Ring space tug project. It will provide “space logistics and delivery” services from low-Earth orbit to cislunar space and beyond. In addition, Blue Ring can be refueled and will be able to deliver fuel to other spacecraft.

Nuclear and detonation engines

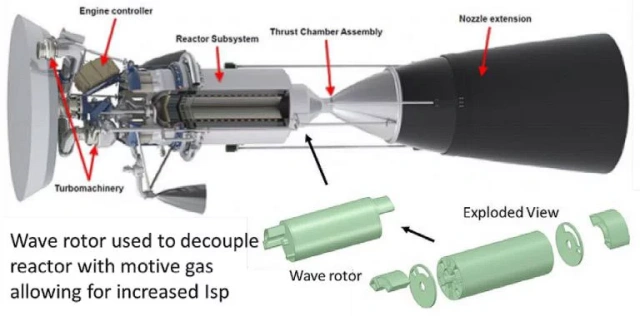

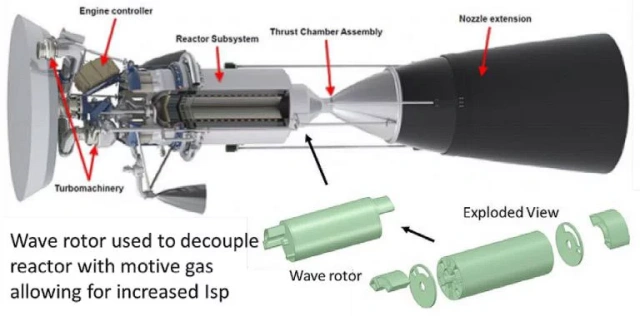

A few years ago, NASA revived its nuclear program to develop a propulsion system that could reach Mars in 100 days. Together with the US Defense Advanced Research Projects Agency (DARPA), NASA is developing nuclear thermal propulsion (NTP) for fast transit missions to Mars.

In an NTP system, a nuclear reactor generates heat that causes hydrogen or deuterium propellant to expand. The hot gas is then directed through nozzles to create thrust.

At the same time, in 2023, the agency selected another concept for such an engine under the NASA Innovative Advanced Concepts (NIAC) program. Developed by University of Florida hypersonics program manager Ryan Gosse, the design uses a “wave rotor topping cycle” that could cut the flight time to Mars to as little as 45 days.

Nuclear electric propulsion (NEP) pairs a nuclear reactor with an ion engine. The reactor supplies electricity to the engine, which generates an electromagnetic field that ionizes and accelerates an inert gas (such as xenon) to create thrust.

NASA also funded the startup Positron Dynamics, which is developing a nuclear fission engine (NFE) that uses hot nuclear fission products to create thrust. The project team is now working on directing the fission fragments in a single direction to provide the thrust a rocket needs.

In the meantime, NASA is planning the Dragonfly mission, which will test a nuclear-powered quadcopter on Saturn’s moon Titan. It will study the composition of Titan’s sand for two years to find out whether it contains organic compounds.

Unable to harness solar energy in Titan’s hazy atmosphere, Dragonfly will be powered by a Multi-Mission Radioisotope Thermoelectric Generator (MMRTG). The mission is planned to launch in 2028.

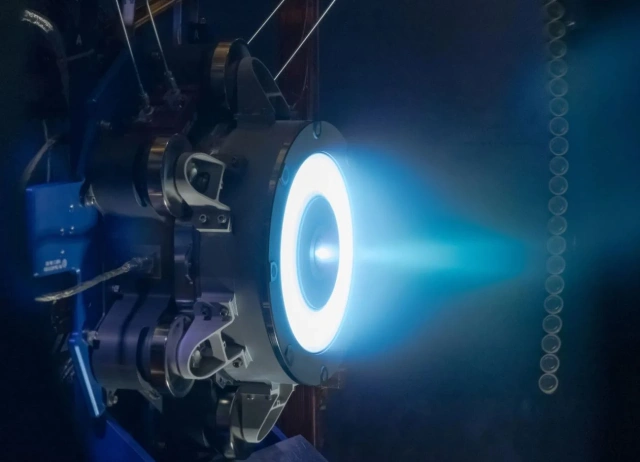

In addition, development of rotating detonation rocket engines (RDREs) is underway. These engines use one or more detonation waves that propagate continuously around an annular channel. During detonation, the combustion products expand at supersonic speed, which saves fuel and increases the engine’s power.

In December 2023, engineers at NASA’s Marshall Space Flight Center successfully tested a 3D-printed rotating detonation rocket engine (RDRE). According to the testers, its performance meets the requirements for deep-space operation, such as a flight from the Moon to Mars. They say this will make it possible to build lightweight propulsion systems that send more payload mass into deep space.

Light sails and lasers to speed up ships

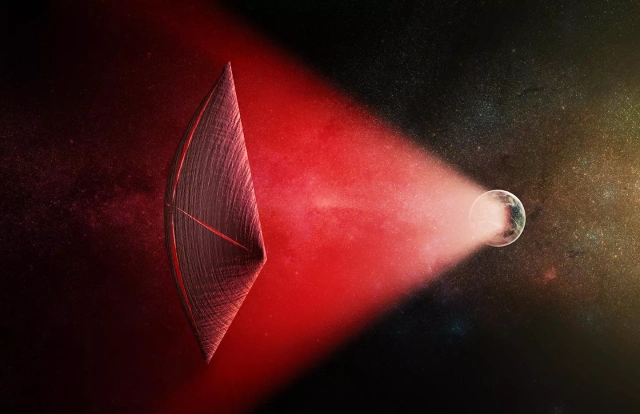

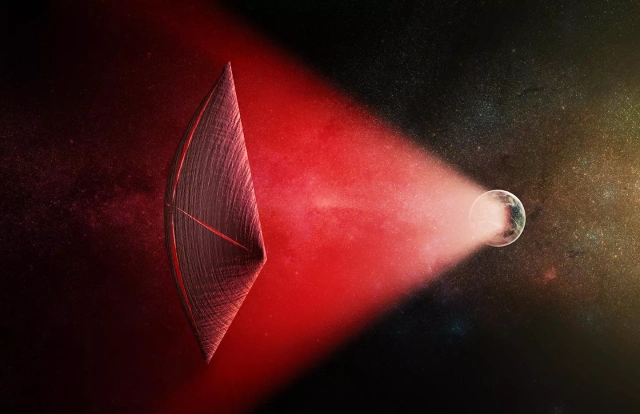

In order to send missions into deep space, we also need installations that can provide high thrust and constant acceleration of ships. Researchers are currently exploring the potential of focused arrays of lasers and light sails. Similar developments are being carried out by Breakthrough Starshot and Swarming Proxima Centauri.

In addition, a group from McGill University, at NASA’s request, has designed a 10-meter-wide laser array that would be based on Earth and heat hydrogen plasma in a chamber behind the spacecraft, creating thrust. It could also potentially allow missions to reach Mars in as little as 45 days.

The 100 MW Laser-Thermal Propulsion (LTP) installation will be able to deliver energy to a spacecraft at distances almost as far as the Moon. It heats the hydrogen fuel to a temperature of 10,000 K.

The lasers are focused into a heating chamber, and the heated hydrogen is then discharged through a nozzle. The team is now testing a more powerful setup. If the experiments are successful, deep space missions will take only a few weeks.

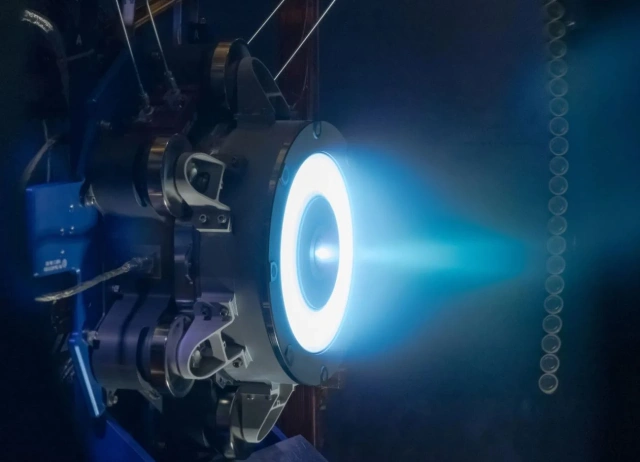

To support deep space missions, NASA is also working on a solar electric propulsion (SEP) project. The project is testing advanced technologies, including solar panels, high voltage power control and distribution systems, energy processing units and power system diagnostic systems. One of the latest developments being tested is a prototype of the Advanced Electric Propulsion System (AEPS).

This is a 12-kilowatt engine that uses a continuous stream of ionized xenon to create thrust. Such a system could potentially accelerate a spacecraft to extremely high speeds using relatively little fuel, which makes it suitable for deep-space missions. The first three AEPS engines will be installed on the Gateway lunar station.
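Why an ion engine needs “relatively little fuel” can be illustrated with the Tsiolkovsky rocket equation. The sketch below is only a rough illustration: the specific impulse values (450 s for a chemical engine, 2,600 s for a xenon thruster), the 60% efficiency and the 5 km/s velocity change are assumptions chosen for the example, not figures from NASA or from AEPS documentation. Only the 12 kW power level comes from the text above.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2, used to convert specific impulse to exhaust velocity


def propellant_fraction(delta_v_m_s: float, isp_s: float) -> float:
    """Share of the initial mass that must be propellant (Tsiolkovsky equation)."""
    exhaust_velocity = isp_s * G0
    return 1.0 - math.exp(-delta_v_m_s / exhaust_velocity)


def thrust_newtons(power_w: float, isp_s: float, efficiency: float) -> float:
    """Thrust from electrical power: F = 2 * efficiency * P / v_e."""
    return 2.0 * efficiency * power_w / (isp_s * G0)


DELTA_V = 5_000.0  # m/s, assumed velocity change for the comparison

# Assumed, illustrative specific impulses (seconds)
for name, isp in [("chemical engine", 450.0), ("xenon electric engine", 2_600.0)]:
    share = propellant_fraction(DELTA_V, isp)
    print(f"{name}: {share:.1%} of the initial mass is propellant")

# 12 kW is the AEPS power quoted in the article; Isp and efficiency are assumed
print(f"thrust at 12 kW: {thrust_newtons(12_000.0, 2_600.0, 0.6):.2f} N")
```

Under these assumptions the chemical engine burns about two thirds of the initial mass as propellant, the electric one less than a fifth. The price is thrust: roughly half a newton, which is why such engines have to run continuously for a long time.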

Communications and navigation in deep space

Before we start sending missions into deep space, we need to solve the problem of communication with them. In October 2023, NASA conducted the Deep Space Optical Communications (DSOC) experiment, which could change the way spacecraft communicate.

During the experiment, data was sent by laser over a distance greater than that between the Earth and the Moon. A near-infrared laser beam carrying encoded test data was directed from a distance of almost 16 million km at the Hale Telescope at the California Institute of Technology’s Palomar Observatory in San Diego County.

The DSOC system was placed on board the Psyche spacecraft. It transmitted the signal during the vehicle’s flight to the main asteroid belt between Mars and Jupiter. NASA notes that the laser can transmit data 10 to 100 times faster than the radio systems traditionally used on other missions.

As part of a demonstration of the system’s capabilities, the agency transmitted to Earth a photo from the constellation Pisces, as well as a 15-second high-resolution video. The maximum data transfer rate via the laser was 267 Mbit/s, and the average was 62.5 Mbit/s. It took only 1.5 minutes to broadcast the video.
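The figures above can be put in perspective with a little arithmetic. This is a minimal sketch using only the numbers quoted in the article (16 million km, 267 and 62.5 Mbit/s, 1.5 minutes); the function names are made up for the example, and the article does not state the actual size of the video, so the result is only the amount of data the link could carry in that time.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition


def one_way_delay_s(distance_km: float) -> float:
    """Time a laser signal needs to cover the given distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S


def data_volume_megabytes(rate_mbit_s: float, duration_s: float) -> float:
    """Data carried at a constant rate, converted from megabits to megabytes."""
    return rate_mbit_s * duration_s / 8.0


distance_km = 16e6   # "almost 16 million km" from the article
duration_s = 90.0    # 1.5 minutes

print(f"one-way signal delay: {one_way_delay_s(distance_km):.1f} s")
print(f"at peak rate:    {data_volume_megabytes(267.0, duration_s):.0f} MB")
print(f"at average rate: {data_volume_megabytes(62.5, duration_s):.0f} MB")
```

The beam itself needs a little under a minute to reach Earth from that distance. Sustained for 1.5 minutes, the peak rate corresponds to about 3 GB of data and the average rate to about 0.7 GB.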

In February 2024, NASA also invited the private space industry to submit plans for missions to Mars, including deep space communications services. They must consider solutions for transmitting data to both ground-based and orbital stations.

In addition, the agency is trying to solve the problem of deep space navigation, which cannot rely on traditional satellite-based methods. In 2018, NASA demonstrated the autonomous navigation technology Station Explorer for X-ray Timing and Navigation Technology (SEXTANT). It uses pulsars, which are rotating neutron stars, as reference points.

Finally, NASA developed and tested an atomic clock for deep space, the Deep Space Atomic Clock. It has already shown exceptional accuracy: it falls behind by only one second in 10 thousand years.

In the future, this will make it possible to launch crewed missions to the far corners of the solar system and beyond. An improved model of the clock, Deep Space Atomic Clock 2, is currently being developed. NASA plans to install it on the VERITAS spacecraft, which will go to Venus, create a complete topographic map of the planet and land
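To see why clock drift matters for navigation, the drift figure quoted for the Deep Space Atomic Clock can be converted into a distance. The sketch below is a simplification under stated assumptions: it takes the article’s figure of one second per 10 thousand years at face value, assumes the drift accumulates linearly, and treats the position error as the accumulated timing error multiplied by the speed of light. The function names are illustrative only.

```python
SECONDS_PER_YEAR = 365.25 * 86_400   # Julian year
SPEED_OF_LIGHT_M_S = 299_792_458.0   # exact by definition


def fractional_drift(drift_s: float, interval_years: float) -> float:
    """Clock error per second of elapsed time (dimensionless)."""
    return drift_s / (interval_years * SECONDS_PER_YEAR)


def ranging_error_m(drift_rate: float, elapsed_s: float) -> float:
    """Distance error if the accumulated timing error is read as light-travel time."""
    return drift_rate * elapsed_s * SPEED_OF_LIGHT_M_S


# Figure quoted in the article: one second in 10 thousand years
rate = fractional_drift(1.0, 10_000.0)
print(f"fractional drift: {rate:.2e}")
print(f"ranging error after one day without correction: {ranging_error_m(rate, 86_400.0):.0f} m")
```

With these inputs the clock drifts by roughly three parts in a trillion, which in this simple model corresponds to a ranging error of about 80 m after a day without correction from Earth.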

Space robotics

Space agencies are developing robotic landers that can explore other planets instead of humans or that will build habitable spaces for astronauts. NASA is working in partnership with US firms such as Astrobotic Technology, Masten Space Systems and Moon Express to develop lunar robotic modules.

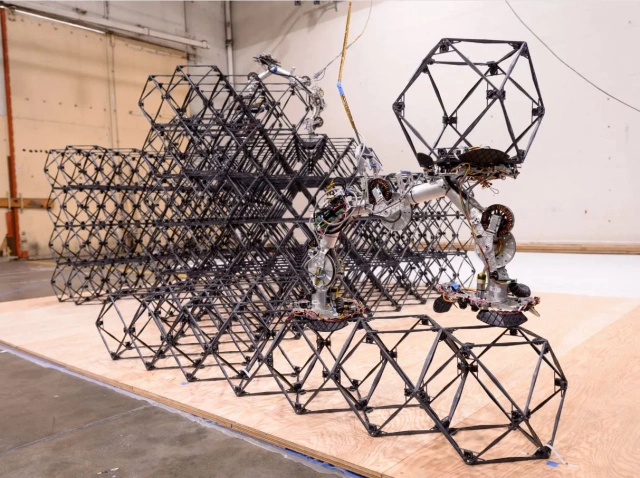

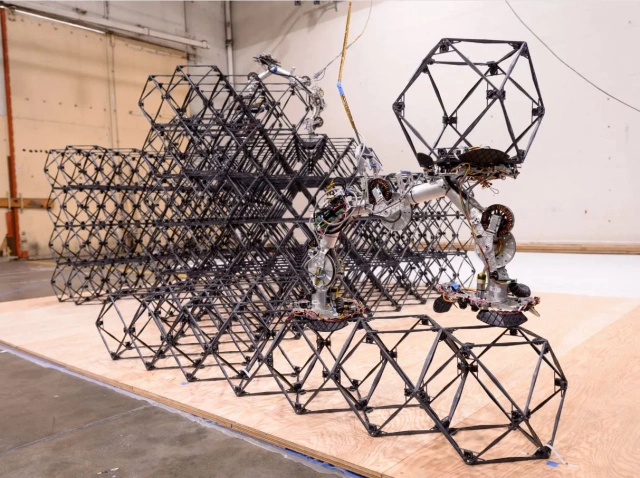

In addition, in January 2024 the US space agency tested the ARMADAS robotic system, which can autonomously assemble various structures on other planets. Potentially, this will make it possible to build infrastructure such as solar power plants, communication towers and temporary buildings for the crew.

The system uses various types of robots, including ones that resemble small worms. They can assemble, repair and redistribute materials for various structures. ARMADAS uses a small set of 3D building blocks, or voxels, made from composite materials to form a structure. The robots can work in orbit, on the surface of the Moon, or on other planets even before humans arrive.

The system can be remotely programmed to create different types of objects. In the experiments, the robots built a shelter of hundreds of voxels in just over four days of work. NASA noted that if the system were sent to the Moon a year before people landed there, it would have time to build 12 similar shelters. Such mini-robots will be able to charge autonomously at stations or even receive energy wirelessly.

Finally, NASA is developing a humanoid robot, Valkyrie, for future missions to Mars. Testing began in Australia in 2023 to demonstrate the robot’s autonomous capabilities as well as its ability to perform tasks in challenging environments. Perth-based Woodside Energy will use Valkyrie to develop remote caretaking technologies for uncrewed and offshore energy facilities.

Valkyrie’s capabilities are expected to be used in Artemis and other missions. For example, the robot will be able to check that equipment is working and carry out maintenance. In the future, it could be used in space industries that would support long-term stays of astronauts on other planets.